THE COMPUTER REVOLUTION

by Myron Berger

Myron Berger writes about consumer electronics for a variety of newspapers and magazines.

Whatever the fiscal excesses of the Washington bureaucracy, there would be no computer revolution today were it not for government spending. Since 1890, beginning with the automation of U.S. Census tabulating by the firm that would later become IBM, our government has supported the research and development of data processing equipment. And in the closing years of World War II, while private industry was simply not willing to invest the necessary time and money in research and development, the granddaddy of the computer we know today was created by civilian scientists working on government-sponsored projects.

Uncle Sam is probably more responsible for the development of digital electronic computers than anyone else, yet his involvement has been, in a sense, distant. Government scientists working in government laboratories made few advances in computer technology; rather, the pattern was to assemble teams of academic scientists at important universities (M.I.T., the Institute for Advanced Study at Princeton, the Moore School of the University of Pennsylvania, and the University of Illinois were the early centers of computer projects) and give them an assignment and tax dollars to work with.

Uncle Sam has also contributed to computer development by fiat. An unfortunate fact of life in the computer world is the lack of standardization. Although this is changing somewhat, traditionally neither hardware nor software could be transferred from one brand to another, and in some cases not even from one model to another by the same maker. To keep its own house in order, the government was forced to tell its contractors that they must use a certain format. Since Uncle Sam is a primary customer for many companies, this particular format has overlapped into business procedures not involving the government.

Interestingly, with all the federal involvement in computers, boondoggles have been rare. Since so much of computer science is still virgin territory, exploration of the frontier cannot help but yield benefits later if not sooner. And though government, particularly the Pentagon, has a long history of injudicious spending, the funding of computer research stands as one of the most remarkable examples of fiscal efficiency in modern times.

The ENIAC Experiment

Take, for example, the case of the legendary ENIAC. In early 1942 the Moore School of Engineering contributed to the war effort by calculating firing tables to determine trajectories of explosive shells or projectiles, given such factors as velocity, air resistance and angle of fire. This activity was carried out primarily by young women using electrically powered mechanical calculators. A year later John Mauchly, a faculty member at Moore, came up with an idea for a computer that would calculate trajectories much faster by using vacuum tubes. Since the device would be programmable, he maintained, it could perform other tasks.
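To give a sense of the arithmetic behind a single firing-table entry, here is a minimal modern sketch in Python. It is not a reconstruction of the Moore School's actual method: the drag model (deceleration proportional to the square of the speed), the drag coefficient, the time step and the function names `shell_range` and `firing_table` are all assumptions chosen for illustration.

```python
import math

def shell_range(speed, angle_deg, drag=0.0001, dt=0.01, g=9.81):
    """Estimate the horizontal range (meters) of a shell fired over flat ground.

    Assumed model: deceleration from air resistance = drag * speed**2,
    with a hypothetical drag coefficient. The motion is stepped forward
    in small time increments of dt seconds.
    """
    angle = math.radians(angle_deg)
    vx = speed * math.cos(angle)
    vy = speed * math.sin(angle)
    x = y = 0.0
    while True:
        v = math.hypot(vx, vy)
        # Air resistance opposes the velocity; gravity pulls straight down.
        vx += (-drag * v * vx) * dt
        vy += (-g - drag * v * vy) * dt
        x_new = x + vx * dt
        y_new = y + vy * dt
        if y_new < 0:
            # The shell crossed ground level during this step:
            # interpolate linearly to estimate where it landed.
            frac = y / (y - y_new)
            return x + frac * (x_new - x)
        x, y = x_new, y_new

def firing_table(speed, angles_deg):
    """Build one column of a firing table: angle of fire -> estimated range."""
    return {a: shell_range(speed, a) for a in angles_deg}
```

A call such as `firing_table(500, range(5, 90, 5))` produces one range per angle. Each entry takes thousands of multiplications and additions, which is why a full table kept a room of human operators busy and why an electronic machine was so attractive.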

Project director Herman Goldstine, a young Army lieutenant with a doctorate in mathematics, saw the value of such a machine, and on April 2, 1943, Mauchly, J. Presper Eckert and J. G. Brainerd (two other professors at Moore) submitted their proposal for an "electronic differential analyzer" to the Ballistic Research Laboratory at the Aberdeen Proving Grounds in Maryland. A paltry $100,000 was the original cost estimate for what would become ENIAC (Electronic Numerical Integrator and Computer).

Between start-up in June 1943 and completion in 1946, however, some $500,000 in government funds was actually spent on the project. According to Arthur W. Burks, one of the inventors of ENIAC, this included $100,000 to $200,000 for disassembling, transporting, reassembling, testing and debugging during the move from Moore to Aberdeen. Burks estimates that the building costs totaled only about $300,000, or about $3 million in current dollars. (A full professor's salary in those days, he points out, was about $6,000 per year.)

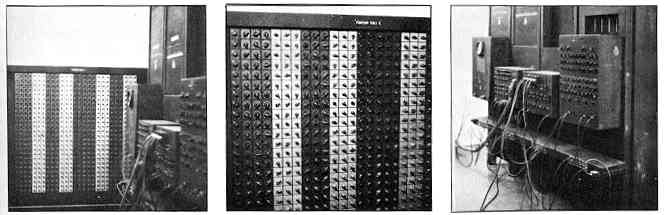

ENIAC was quite a machine. Made up of forty panels, each two feet wide and four feet deep, it could store a total of 700 bits in RAM and 20,000 bits in ROM. This was accomplished not with the integrated circuit boards or microchips of today, but with a massive network of 18,000 vacuum tubes. The Atanasoff-Berry computer, ENIAC's direct progenitor, could only solve sets of simultaneous equations and do that at 60 pulses per second. ENIAC, on the other hand, could handle a variety of problems (its programmers had to manually reset switches and cable links, a process that could take two days) and operated at the then amazing speed of 100,000 pulses per second. By comparison, today's home computers generally operate at between two and four million pulses per second, and powerful supercomputers have achieved speeds of over one billion per second.

It was not until December 1945, after the war had ended, that ENIAC solved its first problem: a question that dealt with the hydrogen bomb and that to this day remains classified. But even before ENIAC was up and running, more advanced computers were being developed, virtually all of them underwritten by the government. Designed by many of the men responsible for ENIAC, these machines introduced an improvement so important that they have become known by the name "stored program" computers. Unlike ENIAC, whose cables had to be reconfigured by hand, these computers were programmed electronically.

The second computer generation is divided into the EDVAC (Electronic Discrete Variable Automatic Computer) and IAS (Institute for Advanced Study) families. The former (including EDVAC and UNIVAC I) were designed primarily by Eckert and Mauchly; the latter (IAS, Whirlwind and ILLIAC), by John von Neumann and others at Princeton's IAS. While many of the early machines were built with military appropriations, their purpose was not always directly related to waging war. The IAS computer, for example, received funding from the Navy to work out a numerical meteorology system.

Uncle Sam's Offspring

Meanwhile, Eckert and Mauchly decided that computers could enjoy an even more promising future in the business world. In October 1946 the two men formed the Electronic Control Corporation, probably the first commercial computer company, and in 1947 changed its name to Eckert-Mauchly Computer Corporation. In 1950, having received no computer orders other than Uncle Sam's, they sold the company to Remington Rand, a manufacturer of office equipment. (In 1955 that company merged with Sperry Corporation to form Sperry-Rand.)

Before the sale, Eckert and Mauchly had been at work on what was to become the first commercial computer: UNIVAC I. Credited with having introduced the first program compiler, the first programming tools and the first high-speed printer, UNIVAC (Universal Automatic Computer) was so significant in the early commercial computer industry that until the 1970s Sperry used its name as the official title of its computer division.

Like its predecessors, UNIVAC resulted from a government contract. In 1949 machines were ordered for the Census Bureau, the Air Force and the Army's Map Unit at a total cost of $4 to $5 million. In June 1951 the first UNIVAC was delivered to the Census Bureau, and in 1954 the first commercial model was sold to the General Electric Company. Although government dollars were not the sole source of income for the first commercial computer company, those early sales of UNIVAC I helped it reach the firm financial footing necessary to become a viable enterprise.

In the 1950s Uncle Sam was involved in financing research and development, helping the early companies survive the period before computers became accepted in the business community as valuable tools that justified a multimillion-dollar price. Then, around 1960, Uncle Sam abandoned his role as the primary customer of the computer companies and became instead the standards-setter. One of the most famous examples is the computer programming language called COBOL (COmmon Business-Oriented Language). Although the Department of Defense had "no significant role in its funding or development," according to one Pentagon official, the Navy adopted the language as a standard in the late sixties and "encouraged private industry to use it." COBOL is now the standard language for business computers in the Defense Department and is widely used by private industry.

ENIAC TODAY: R.I.P.

Four steps up and just to the right inside the entrance of the Moore School in Philadelphia, there is a plain paper printout taped to the outside of a glass door. "To ENIAC," it says, and whoever did the computer printout message arranged it so the letters came out in Gothic script. The door is always open.

The antique inside is huge, monstrous and bulky, with connecting cables the size of a man's forearm and data entering boards taller and broader than a Philadelphia 76er starting center. There it is, by golly, the first electronic computer. The daddy of them all: Apples and IBM PCs and Commodore 64s. It doesn't work, of course.

The room that houses ENIAC (what's left of it, anyway) is a kind of pass-through for students on their way to the electrical engineering labs deeper in the building. Two doors, one window, gray linoleum tile on the floor. Hundreds go by every day, but no one gives ENIAC a passing glance. It's not that students have no romance anymore (though a good argument could be made in this respect); it's that the machine is basically boring now. It doesn't do anything. It's not pretty. And God knows it weighs too much to try and move it around. It just sits there like the blackened body of some long-dead warrior, prepared for a battle that was already over when the moment came.

If ENIAC were turned on today (and presumably it could be, though some of its parts are at the Computer Museum in Boston and the Smithsonian Institution in Washington, D.C.), it would be only slightly slower and somewhat dumber than a handheld scientific calculator from Texas Instruments or Hewlett-Packard. But no one is interested in turning it on. Efforts by the University of Pennsylvania to raise money so ENIAC can be preserved in a special showroom for the public, along with a documented display of its history, have met with complete indifference. It is big, black and ugly.

And like the textile mills that started the industrial revolution, like the squash courts at the University of Chicago where the nuclear age began, it will gradually deteriorate and become a nuisance where it stands, eventually to be torn down to make room for something newer, cleaner and pleasanter to look upon. Which is probably as it should be. Because ENIAC was an idea whose time had come. The form itself means nothing anymore.

BOB SCHWABACH

Pentagon Progeny

The Pentagon is currently trying to repeat its COBOL success with the new programming language called Ada. Unlike COBOL, Ada was contracted out to a private company (CII Honeywell Bull, a French division of Honeywell) by the Department of Defense. And unlike the names of most computer vocabularies, Ada is not an acronym but the given name of Lady Lovelace, the first computer programmer.

In the early 1970s the Pentagon decided that software costs were getting out of hand (before the decade was out, costs had escalated to $3 billion a year) primarily because of the lack of standardization (a single program might have to be purchased in several versions in order to run on different computers within DOD). A High Order Language Working Group, formed to evaluate the existing languages, concluded that none was sophisticated enough and insisted that a new language be written. The goal was nothing less than the creation of a computer language that would become "the American national standard." Questionnaires asking for suggestions and desired features of a universal language were sent to some 900 individuals and companies.

To date, about $18.5 million has been spent on Ada. Hoping to have all its new computer programs written in Ada by 1987, the Defense Department has formed a group called Software Technology for Adaptable Reliable Systems (STARS) to promote and develop use of Ada in all Pentagon applications and has submitted a request for $222 million to fund the group between fiscal 1984 and 1988. In explaining the size of the request, a Pentagon spokesman said: "Most computer languages have a life cycle of twenty-five years, and we expect Ada to be useful until 2010. We also expect it to save many hundreds of millions of dollars."

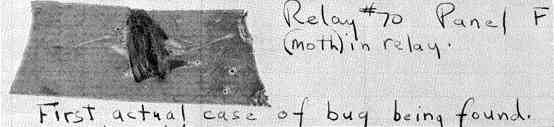

THE ORIGINAL BUG

While no one has ever seen a gremlin (except in the movies), the bug is a pest of another color. In the Naval Museum at Dahlgren, Virginia, on a page of the logbook maintained by Grace Hopper while she worked on the Mark II computer, is preserved the original computer bug. This unlucky moth had been crushed to death in one of the relays within the giant electromechanical computer, bringing the machine's operations to a halt. When she discovered the cause of the malfunction, Captain Hopper carefully removed the historic carcass, taped it to the log and noted: "First actual case of bug being found."

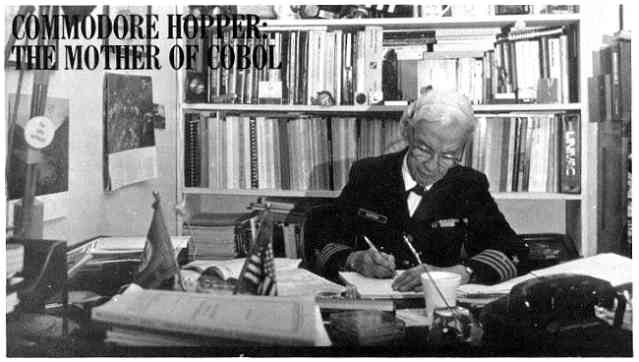

She was the third programmer on the first digital computer. She wrote the first compiler software, in effect creating the whole field of high-level programming languages. She helped preserve the first computer "bug." She was also the moving force behind COBOL, for years the standard computer language for business applications.

At age seventy-seven Grace Murray Hopper was already the Navy's oldest active officer when she was named the nation's only living commodore in 1983. On the road over three hundred days a year, she still moves with the resolute energy she exhibited in the days of the first electromechanical computer. With her white hair and steel-rimmed glasses, she might remind you of your grandmother, if your grandmother has military posture and a crisp Navy uniform.

One of her talents is the ability to cut through red tape. Twenty-five years ago she called in executives of all the major computer manufacturers, along with top-ranking officers and senior civilian officials from the intelligence community, the Bureau of Standards and the Defense Department, and explained that it just wouldn't work to have everybody off writing in different computer languages. The scientists had FORTRAN, but computers wouldn't move into business applications until there was a standard business language. The result of that crusade was COBOL. Ironically, though she was the U.S. government's senior computer expert, Hopper was a civilian and was backed by no federal funding at all.

When World War II broke out, Grace Hopper was a mathematics teacher at Vassar. In 1943 she enlisted in the U.S. Naval Reserve and was assigned to the Bureau of Ordnance Computation Project at Harvard, where she worked under Howard Aiken during the development of the Mark I. It was with this pre-electronic, electromechanical ballistics computer that she started her programming career. "You could walk around inside her," Hopper recalls. The Commodore refers to all computers as "she," in proper naval tradition, and never fails to remind audiences that the first computer was invented by the Navy, despite the "tendency of a certain junior service, which wasn't even born then, to take credit for early computers."

When the war was over, Hopper was told that at age forty she was too old for the regular Navy. Remaining in the reserves, she signed on to the Harvard faculty and in 1949 joined the inventors of ENIAC, the first electronic digital computer, at the Eckert-Mauchly Computer Corporation. Here, during the development of UNIVAC, the first commercial computer, she wrote the first compiler software. She stayed on as staff scientist for systems programming until after the merger with Sperry Corporation and the COBOL crusade, when she was drawn back into the Navy.

Once in 1966 the Navy tried to retire her, an occasion she recalls as "the saddest day of my life." Four months later she was asked to return to take charge of standardizing the Navy's use of high-level programming languages. She reported for temporary active duty in 1967, and she hasn't let the retirement folks catch up with her since.

Her determination to shake things up permeates everything she says, whether lecturing on the history of computers or talking about problems facing contemporary systems designers. She likes to tell audiences of computer professionals: "If during the next twelve months any one of you says, `But we've always done it this way,' I will instantly materialize in front of you and I will haunt you for twenty-four hours."

HOWARD RHEINGOLD

NATIONAL SCIENCE FUNDS

Some of the major contributions to Uncle Sam's computer research come from the civilian-oriented National Science Foundation. According to its charter, the NSF is authorized to "foster and support the development and use of computer and other scientific methods and technologies, primarily for research and education in the sciences."

Spurred on by the 1957 launching of the Russian satellite Sputnik, the NSF began funding mainframe computer installations on university campuses. Though this program wound down in the 1970s, the students and university scientists trained on these systems went on to develop advances of their own in the field of computer science.

NSF also organized the National Center for Atmospheric Research as an intellectual focal point for atmospheric and oceanographic scientists. NCAR features two Cray-1A supercomputers used for extensive modeling and data analysis as well as storage and data collection. The end results can be seen as the computerized weather maps in daily TV forecasts.

More recently, grants from NSF have laid the groundwork for educational software firms in the personal computer field. Among the recipients is the Learning Company, Ann Piestrup's pioneering effort.

In 1984 NSF's computer science budget amounted to nearly $17 million, over a third of its total budget for the year. On the agenda for the late 1980s is a major effort to make supercomputers more accessible to individual researchers in need of such powerful systems. This will be made possible by NSF financial support for computational mathematics and hardware design in addition to basic research in applications of supercomputers.

Although it is still very early in the scheme of things for Ada to be used by the public/commercial sector, this is, in fact, already happening. A very simple version is available for home computers, and Intellimac, a high-tech company that does business with the Pentagon and supplies computer programs to commercial clients, has already used Ada to write applications programs for private corporations to perform payroll, inventory management and other conventional data processing.

In between ENIAC and Ada were a number of other computer developments that came either directly or indirectly from government funds. Dr. J. C. R. Licklider, a professor of computer science at M.I.T., oversaw much of the "action" while serving as director of the Information Processing Techniques section of the Defense Advanced Research Projects Agency (DARPA). One of the most significant technologies developed by the agency was in the area of time sharing, the practice of linking two or more computers so that data can be transferred and processed between them. The Compatible Time-Sharing System (CTSS) was, in his words, "the first large time-sharing system and possibly the first of any size."

CTSS was developed between 1960 and 1964 and was the main effort of Project MAC (for Machine-Aided Cognition, but also known within the group as Machine-Augmented Confusion or Men and Computers). Time sharing is behind the hundreds of private and commercial data bases now in operation, and Licklider holds that most microcomputers today that use time sharing have many elements of CTSS.

When CTSS was completed, MAC turned its attentions to another project: Multics (Multiplexed Information and Computation System), which took five years to perfect and culminated in a multi-user operating system. According to Licklider, Multics was the basis of several of the features found in UNIX, the multi-user operating system developed by Bell Labs and quite popular today.

In 1968 DARPA began work on what was to become ARPANET, the first packet-switching network, at least part of which was operational by 1969. This, says Licklider, led to a mushrooming of computer networks, most of which, then and now, still use ARPANET technologies with modified protocols. ARPANET is still in operation and is the basis for the Defense Data Network (DDN), a general-purpose computer communications network designed for message traffic, file transfers and other applications.

More exotic computer technology has been pioneered by the supersecret National Security Agency (NSA). Charged with sensitive counterintelligence activities like monitoring international and domestic communications traffic, by 1962 NSA had what one of its officials called "the world's largest computing system." Among NSA's developments were high-density memory storage, supercomputers (the first Cray-1s were delivered to the agency) and computer-assisted translation, allowing machines to recognize, transcribe and translate voice communications. Currently under investigation is the promising area of optical computing technology for high-speed information processing. (On the other hand, the NSA has been perceived as hindering efforts in certain areas of computer science with its policy of reviewing and even putting a "classified" label on civilian research in cryptography.)

There were many other contributions to computer science and electronic technology wrought by Uncle Sam's (read: taxpayers') dollars. The National Aeronautics and Space Administration (NASA) alone was responsible for much of the miniaturization of components and low-power systems that made home computers a reality. Considering that the same U.S. government also brought us nuclear, biological and chemical weapons, killer satellites and the Vietnam war, perhaps the computer contributions merely serve to help even up the balance sheet.

Government-funded computers have made it possible for humans to travel into outer space and set foot on the moon. From the dawn of the space age, these machines have handled guidance for every leap into the void, keeping track of all the calculations and countless bits of data essential to successful missions. This is surely the best example of an activity that could not have been accomplished by human brainpower alone.

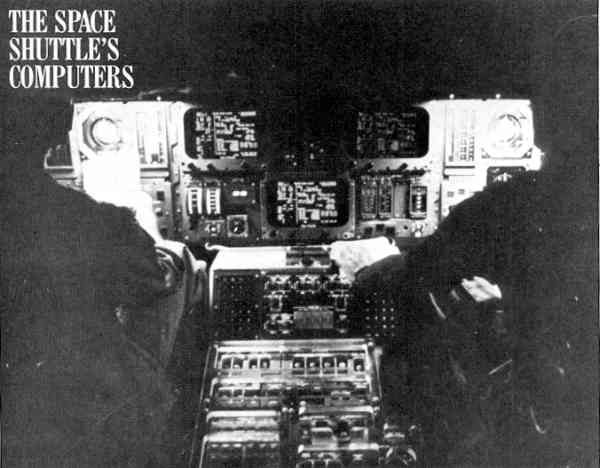

The most celebrated computers, those on NASA's space shuttle, have gained notoriety as much for their ill-timed malfunctions as for their efficient shepherding of the spaceship's flight crews. Each shuttle carries five 110-pound suitcase-size computers, modified from standard IBM machines originally developed for use in military aircraft. All of the spaceship's ascent operations and much of its flight are controlled by four principal computers running in tandem to check on each other; if they fail to agree, the backup computer is brought in to arbitrate. Sensors throughout the craft report to the computers on the status of life support, propulsion and navigation systems. The crew can input commands with a series of three-digit codes.
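The cross-checking arrangement described above can be pictured with a toy model. The Python sketch below is only an illustration of the idea as this article states it (four principal computers compared against each other, with the backup consulted when they disagree). It is not NASA's actual redundancy logic, and the function name `arbitrate` and the sample command codes are hypothetical.

```python
def arbitrate(primary_outputs, backup_output):
    """Pick the command to act on and list which principal computers dissented.

    primary_outputs: the answers from the principal computers, in order.
    backup_output:   the answer from the backup computer.
    Returns (chosen_output, indexes_of_dissenting_principals).
    """
    # If every principal computer gives the same answer, the backup is not needed.
    if len(set(primary_outputs)) == 1:
        return primary_outputs[0], []
    # Otherwise the backup's answer decides, and any principal computer
    # that disagrees with it is flagged for the crew.
    dissenters = [i for i, out in enumerate(primary_outputs) if out != backup_output]
    return backup_output, dissenters
```

For example, `arbitrate(["101", "101", "102", "101"], "101")` settles on `"101"` and flags the third computer (index 2) as the odd one out.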

Space shuttle computers have acted up from the very first test landing. In July 1977 one of the on-board computers failed as the craft was released from a Boeing 747 that had carried it into the stratosphere. During the initial attempt to launch the shuttle Columbia in April 1981, a timing fault disrupted communications between the main computers and the backup system, causing a two-day delay in lift-off. In November 1981 Columbia's second launch was disrupted by erroneous reports to its computers of low pressure in the craft's oxygen tanks, forcing a postponement of over a week. While in space during its December 1983 mission, two of Columbia's computers shut down and one would not restart; the failure was traced to metal particles, as small as a thousandth of an inch, that had gotten into their electronic components. The space shuttle Discovery's maiden voyage in June 1984 was delayed by its malfunctioning backup computer; a day later the mission was scrubbed when the computers shut down the main rocket's engines just six seconds before launch.

Despite such problems, the space shuttle's standardized computers are considered an improvement over the expensive custom-made systems used earlier in such projects as the Apollo moon missions. Of late, civilian computers have made their way on board: beginning with the shuttle's ninth mission, an off-the-shelf Grid office computer has been used to display the craft's position above the earth. Apple IIs have also been used to monitor experiments in the shuttle's payload.
